Convolutional neural networks

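A convolutional neural network (CNN) is a neural network built mainly from a succession of convolution and pooling layers. In a classifier such as the one below, these layers are followed by fully connected (Dense) layers that produce the class scores.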

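Convolution

- Number of filters K: each filter produces one output feature map, so K is the depth of the output.
- Filter size F: each filter covers an F × F window of the input.
- Stride S: the step, in pixels, between two consecutive positions of the filter.
- Zero-padding P: the number of rows and columns of zeros added around the input.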
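These hyperparameters fix the spatial size of the output: for an input of width W, the output width is (W - F + 2P) // S + 1. The pure-Python sketch below (no Keras involved) computes that size and applies one F × F filter to a single-channel image; `conv_output_size` and `conv2d_single` are hypothetical helper names chosen for illustration, and the image is assumed square. Like deep-learning libraries, it computes a cross-correlation (the filter is not flipped). A real `Conv2D` layer also sums over the input channels and repeats the operation for each of the K filters, which yields K output maps.

```python
def conv_output_size(w, f, s=1, p=0):
    """Output width for input width W, filter size F, stride S and zero-padding P."""
    return (w - f + 2 * p) // s + 1


def conv2d_single(image, kernel, stride=1, padding=0):
    """Apply one square F x F filter to a square single-channel image."""
    n, f = len(image), len(kernel)
    # Zero-padding: surround the image with `padding` rows and columns of zeros.
    size = n + 2 * padding
    padded = [[0.0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            padded[i + padding][j + padding] = image[i][j]
    out = conv_output_size(n, f, stride, padding)
    result = []
    for i in range(out):
        row = []
        for j in range(out):
            # Sum of element-wise products between the filter and the window at (i*S, j*S).
            acc = 0.0
            for u in range(f):
                for v in range(f):
                    acc += padded[i * stride + u][j * stride + v] * kernel[u][v]
            row.append(acc)
        result.append(row)
    return result


image = [[1, 2, 3, 0],
         [4, 5, 6, 1],
         [7, 8, 9, 2],
         [0, 1, 2, 3]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d_single(image, kernel))  # 3 x 3 output (F = 2, S = 1, P = 0)
print(conv_output_size(150, 5))      # 146
```

With F = 5, S = 1 and P = 0 (Keras' default `padding='valid'`), a 150-pixel-wide input gives 146, which matches the first `Conv2D` layer in the model summary.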

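Pooling

- Cell size F: each output value summarizes an F × F cell of the input (its maximum, for max pooling).
- Stride S: the step between two consecutive cells.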
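Max pooling uses the same size formula as a convolution, without padding: a 2 × 2 cell with stride 2 halves each spatial dimension (rounding down), and the layer has no trainable parameters. A minimal pure-Python sketch, where `max_pool2d` is a hypothetical helper that takes a square single-channel feature map:

```python
def max_pool2d(feature_map, f=2, s=2):
    """Max pooling of a square single-channel feature map with F x F cells and stride S."""
    n = len(feature_map)
    out = (n - f) // s + 1  # same size formula as a convolution, with P = 0
    return [
        [
            max(feature_map[i * s + u][j * s + v] for u in range(f) for v in range(f))
            for j in range(out)
        ]
        for i in range(out)
    ]


fm = [[1, 3, 2, 4],
      [5, 6, 1, 0],
      [7, 2, 9, 8],
      [1, 0, 3, 4]]
print(max_pool2d(fm))  # [[6, 4], [7, 9]]
```

For the 63 × 63 maps of the second block, this gives (63 - 2) // 2 + 1 = 31, as in the model summary.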

BatchNormalization
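Batch normalization normalizes each feature over the current mini-batch, then rescales it with two learned parameters, γ (scale) and β (shift): x̂ = (x - μ_B) / sqrt(σ²_B + ε) and y = γ·x̂ + β. Keras provides it as `keras.layers.BatchNormalization` (default `epsilon=1e-3`); the model below does not use it. The pure-Python sketch shows only the training-time forward pass on a batch of flat feature vectors, with `batch_norm` as a hypothetical helper. After a convolution, the statistics are computed per channel over the batch and all spatial positions, and at inference Keras uses moving averages of μ and σ² instead of the batch statistics.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-3):
    """Training-time batch normalization of a batch of feature vectors.

    batch: list of samples, each a list of features; gamma, beta: one value per feature.
    """
    m = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(m)]
    for k in range(n_features):
        column = [sample[k] for sample in batch]
        mean = sum(column) / m
        var = sum((x - mean) ** 2 for x in column) / m  # biased (population) variance
        for i, x in enumerate(column):
            x_hat = (x - mean) / math.sqrt(var + eps)  # zero mean, unit variance
            out[i][k] = gamma[k] * x_hat + beta[k]  # learned scale and shift
    return out


batch = [[1.0, 10.0], [3.0, 20.0], [5.0, 30.0]]
print(batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```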

Imports

Hyperparameters

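```python
# Images are resized to HEIGHT x WIDTH pixels with NUM_CHANNELS color channels (RGB)
WIDTH = 150
HEIGHT = 150
NUM_CHANNELS = 3

VALIDATION_SPLIT = 0.2  # fraction of the images held out for validation
BATCH_SIZE = 32         # images per gradient update
LEARNING_RATE = 1e-4
EPOCHS = 10             # full passes over the training set
```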
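The split and batch settings determine how many batches make up one epoch. A minimal sketch, assuming (as we understand `ImageDataGenerator`) that `validation_split` is applied separately within each class folder, with `int(validation_split * n)` images of each class going to validation. The helper names and the per-class image counts are hypothetical, not those of this dataset.

```python
import math

VALIDATION_SPLIT = 0.2
BATCH_SIZE = 32


def split_counts(images_per_class, validation_split=VALIDATION_SPLIT):
    """Approximate (n_train, n_val) when the split is applied within each class."""
    n_val = sum(int(validation_split * n) for n in images_per_class)
    return sum(images_per_class) - n_val, n_val


def batches_per_epoch(n_images, batch_size=BATCH_SIZE):
    """Number of batches per epoch; the last batch may be smaller."""
    return math.ceil(n_images / batch_size)


# Hypothetical number of images in each of 5 class folders.
counts = [600, 900, 650, 700, 800]
n_train, n_val = split_counts(counts)
print(n_train, n_val)                                        # 2920 730
print(batches_per_epoch(n_train), batches_per_epoch(n_val))  # 92 23
```

On the real data, `len(train_set)` and `len(val_set)` report the number of batches per epoch directly.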

Download the data

Prepare the data

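```python
import tensorflow as tf
from tensorflow import keras

# Rescale pixel values from [0, 255] to [0, 1] and reserve VALIDATION_SPLIT
# of the images for validation.
generator = keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    validation_split=VALIDATION_SPLIT
)

# DATA_DIR: root folder of the dataset, with one subdirectory per class.
# Note that target_size is (height, width).
train_set = generator.flow_from_directory(
    DATA_DIR,
    target_size=(HEIGHT, WIDTH),
    batch_size=BATCH_SIZE,
    class_mode='categorical',  # one-hot encoded labels
    subset='training',
    shuffle=True
)

val_set = generator.flow_from_directory(
    DATA_DIR,
    target_size=(HEIGHT, WIDTH),
    batch_size=BATCH_SIZE,
    class_mode='categorical',
    subset='validation'
)

# One class per subdirectory of DATA_DIR
num_classes = len(train_set.class_indices)
print("Total classes:", num_classes)
```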
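`flow_from_directory` treats each subdirectory of `DATA_DIR` as a class and assigns label indices in alphanumeric order of the folder names, which is what `train_set.class_indices` reports. The pure-Python sketch below mimics that mapping so a dataset folder can be checked before training. `inspect_data_dir` is a hypothetical helper; the extension list and the recursive search are assumptions meant to approximate what Keras accepts.

```python
from pathlib import Path

# Assumption: extensions close to those accepted by flow_from_directory.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".bmp", ".ppm", ".tif", ".tiff"}


def inspect_data_dir(data_dir):
    """Return (class_indices, image count per class) for a class-per-folder dataset."""
    root = Path(data_dir)
    # One class per subdirectory, indexed in alphanumeric order of the folder names.
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    class_indices = {name: index for index, name in enumerate(class_names)}
    counts = {
        name: sum(
            1 for f in (root / name).rglob("*")
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
        for name in class_names
    }
    return class_indices, counts


# Usage, with DATA_DIR as in the cell above:
# class_indices, counts = inspect_data_dir(DATA_DIR)
```

The resulting `class_indices` should match `train_set.class_indices`, and empty or unexpectedly small classes show up in `counts`.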

Model design

```python
# Three blocks of two 5x5 convolutions (64 filters, stride 1, 'valid' padding)
# followed by 2x2 max pooling, then a fully connected classifier.
model = keras.Sequential([
    keras.Input(shape=(HEIGHT, WIDTH, NUM_CHANNELS)),  # (height, width, channels)

    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.MaxPooling2D(2, 2),  # pool_size=2, strides=2

    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.MaxPooling2D(2, 2),

    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.Conv2D(64, (5, 5), activation=tf.nn.relu),
    keras.layers.MaxPooling2D(2, 2),

    keras.layers.Flatten(),  # feature maps -> one vector per image
    keras.layers.Dense(128, activation=tf.nn.relu),
    keras.layers.Dense(num_classes, activation=tf.nn.softmax)  # one probability per class
])

model.summary()
```

```text
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 146, 146, 64)      4864
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 142, 142, 64)      102464
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 71, 71, 64)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 67, 67, 64)        102464
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 63, 63, 64)        102464
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 31, 31, 64)        0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 27, 27, 64)        102464
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 23, 23, 64)        102464
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 11, 11, 64)        0
_________________________________________________________________
flatten (Flatten)            (None, 7744)              0
_________________________________________________________________
dense (Dense)                (None, 128)               991360
_________________________________________________________________
dense_1 (Dense)              (None, 5)                 645
=================================================================
Total params: 1,509,189
Trainable params: 1,509,189
Non-trainable params: 0
_________________________________________________________________
```
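The shapes and parameter counts in the summary can be checked by hand: a `Conv2D` layer with K filters of size F × F over C input channels has F·F·C·K weights plus K biases, a `Dense` layer with n inputs and m outputs has n·m + m, and pooling and `Flatten` have none. The pure-Python sketch below replays that arithmetic for this particular architecture; the helper names are hypothetical and the 5 classes are taken from the summary.

```python
def conv_params(f, c_in, k):
    """F x F x C_in weights per filter, K filters, plus one bias per filter."""
    return f * f * c_in * k + k


def dense_params(n_in, n_out):
    """One weight per input/output pair plus one bias per output."""
    return n_in * n_out + n_out


def replay_summary(height=150, width=150, channels=3, num_classes=5):
    """Recompute output shapes and parameter counts of the model above."""
    h, w, c = height, width, channels
    rows, total = [], 0
    for _ in range(3):  # three (Conv2D, Conv2D, MaxPooling2D) blocks
        for _ in range(2):
            params = conv_params(5, c, 64)
            h, w, c = h - 5 + 1, w - 5 + 1, 64  # 'valid' padding, stride 1
            rows.append(("Conv2D", (h, w, c), params))
            total += params
        h, w = (h - 2) // 2 + 1, (w - 2) // 2 + 1  # 2x2 cells, stride 2
        rows.append(("MaxPooling2D", (h, w, c), 0))
    n = h * w * c
    rows.append(("Flatten", (n,), 0))
    for units in (128, num_classes):
        params = dense_params(n, units)
        rows.append(("Dense", (units,), params))
        total += params
        n = units
    return rows, total


rows, total = replay_summary()
for name, shape, params in rows:
    print(f"{name:<14}{str(shape):<18}{params}")
print("Total params:", total)
```

The total comes to 1,509,189, as in the summary. The first `Dense` layer alone holds 991,360 of these parameters, about two thirds, because it is connected to all 7,744 flattened values.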

Training configuration

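```python
# Categorical cross-entropy matches the softmax output layer and the one-hot
# labels produced by class_mode='categorical'.
model.compile(loss="categorical_crossentropy",
              optimizer=keras.optimizers.RMSprop(learning_rate=LEARNING_RATE),
              metrics=["accuracy"])
```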
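With `class_mode='categorical'` the labels are one-hot vectors, so categorical cross-entropy reduces to -log of the probability the softmax assigns to the true class. RMSprop divides each gradient by the square root of a moving average of its squared values, which keeps step sizes comparable across weights whose gradients have very different scales. The pure-Python sketch below shows both for a single example and a single weight. The helper names are hypothetical, `rho=0.9` and `eps=1e-7` mirror Keras' documented RMSprop defaults, and the exact placement of epsilon in Keras' implementation may differ slightly from this textbook form.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # y_true is one-hot: only the term of the true class is non-zero.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def rmsprop_step(w, grad, v, lr=1e-4, rho=0.9, eps=1e-7):
    # Moving average of the squared gradient, then a scaled gradient step.
    v = rho * v + (1 - rho) * grad ** 2
    w = w - lr * grad / (math.sqrt(v) + eps)
    return w, v


probs = softmax([2.0, 1.0, 0.1])
print(probs, categorical_crossentropy([1, 0, 0], probs))
w, v = rmsprop_step(w=1.0, grad=0.5, v=0.0)
print(w, v)
```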

Training

Reporting

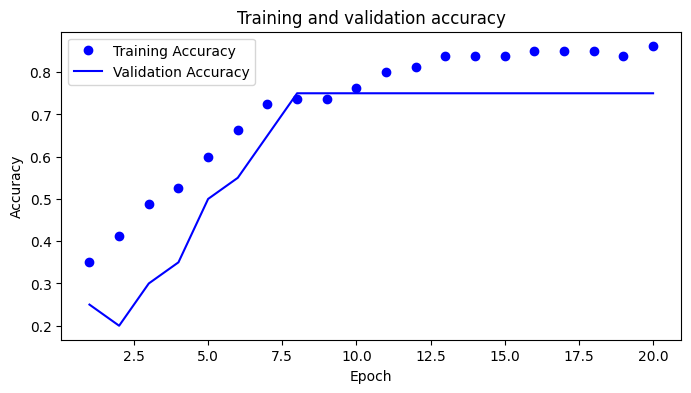

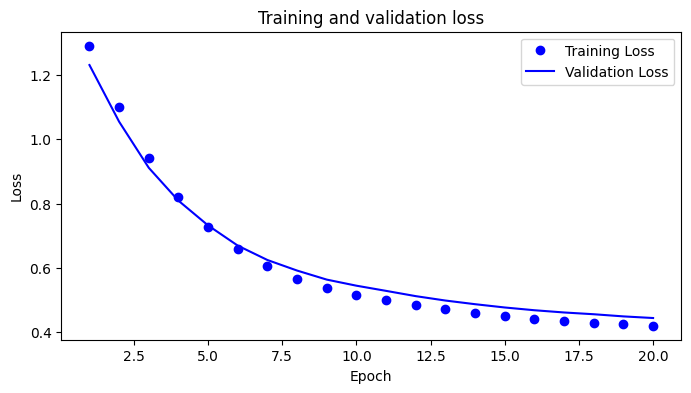

After training, it is important to plot the metric curves (accuracy and loss) of both the training and the validation sets, epoch by epoch.

```python
import matplotlib.pyplot as plt

# `history` is returned by model.fit(...) in the training step; the val_* keys
# exist only because val_set was passed as validation data.
acc = history.history['accuracy']
loss = history.history['loss']
val_acc = history.history['val_accuracy']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)

# Accuracy: dots for training, solid line for validation
plt.figure(figsize=(8, 4))
plt.plot(epochs, acc, 'bo', label='Training Accuracy')
plt.plot(epochs, val_acc, 'b', label='Validation Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Training and validation accuracy')
plt.legend(loc='best')
plt.show()

# Loss: same layout
plt.figure(figsize=(8, 4))
plt.plot(epochs, loss, 'bo', label='Training Loss')
plt.plot(epochs, val_loss, 'b', label='Validation Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.title('Training and validation loss')
plt.legend(loc='best')
plt.show()
```

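These curves show whether the model generalizes: if the training accuracy keeps rising while the validation accuracy stalls, or the validation loss starts to increase, the model is overfitting the training data.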
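One way to read these curves programmatically is to compare the training and validation metrics: a validation loss that reaches its minimum before the last epoch, or a large final gap between training and validation accuracy, points to overfitting. The sketch below applies this heuristic to a dictionary shaped like `history.history`. `diagnose` and its 0.1 gap threshold are arbitrary choices for illustration, and the numbers in the usage example are made up, not results from this model.

```python
def diagnose(history, gap_threshold=0.1):
    """Heuristic overfitting check on a dict shaped like Keras' history.history."""
    val_loss = history["val_loss"]
    # Epochs are numbered from 1, as on the plots.
    best_epoch = min(range(len(val_loss)), key=lambda i: val_loss[i]) + 1
    final_gap = history["accuracy"][-1] - history["val_accuracy"][-1]
    overfitting = best_epoch < len(val_loss) or final_gap > gap_threshold
    return {"best_epoch": best_epoch, "final_gap": final_gap, "overfitting": overfitting}


# Made-up values for illustration only.
toy_history = {
    "accuracy": [0.50, 0.70, 0.85, 0.95],
    "val_accuracy": [0.50, 0.65, 0.70, 0.68],
    "loss": [1.30, 0.80, 0.45, 0.20],
    "val_loss": [1.20, 0.90, 0.80, 0.95],
}
print(diagnose(toy_history))
```

On the real run, `diagnose(history.history)` gives the epoch with the lowest validation loss, a natural candidate for the number of epochs to keep.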